I was taking a look today at one of the most famous chatbots, DoNotPay, to prepare some thoughts on lawtech for a talk I am giving next week, and I thought I would try it out. When asked what I needed help with, I typed in "parking ticket", picked the medical emergency option, and was quickly directed to a chatbot that handles parking tickets. I was asked a series of simple questions, which included:

- where was the appointment (hospital)
- who was I taking (I said my wife)
- what was the nature of the medical emergency (pregnancy)

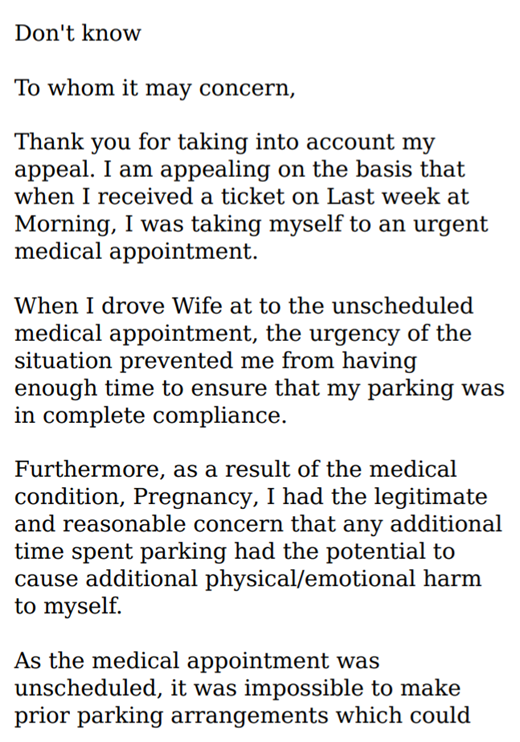

Very quickly, a draft letter/email was produced, which I am then free to adopt to deal with my problem. It's done in an instant. And it said this (I have excerpted only the first few paragraphs by way of illustration)…

Apart from the grammar problems, which I am not so worried about, the Bot did a very nice and super-quick job of producing a draft appeal letter, essentially asking the administrator of the fines to respond sympathetically to my extenuating circumstances. Perhaps the most important point is that I'd use it again to get myself started if I had a real problem, but there are two other points worth noting.

The first is that there is an obvious factual error. A very simple statement of facts from me to the chatbot has been mangled. I can easily correct it, but I wonder whether subtler slips like that would generally be picked up by less vigilant consumers of the product. I don't think the fact-mangling was user error on my part; I think the Bot made a clear mistake. If I am right, was it negligently built? Who is responsible, and how far does that depend on my being able to spot and correct the mistake?

The second point is that, at least arguably, it has manufactured a 'defence' which is false. I said nothing to suggest I was prevented from parking due to the pressure of the situation. It might be true, it might not (I made the situation up). Again, I might correct that mistake if I were so inclined, but the Bot is leading me down a path here, isn't it? If the Bot's statement is unfounded and/or untrue, then it is encouraging me to lie, isn't it? It is not too difficult to imagine bot users rationalising the approach as simply an acceptable lawyerly tactic rather than a dishonest one. Who takes responsibility for that?

It must have been a mistake, as otherwise it describes you as pregnant.


I'm sorry to see that you have unfollowed me, Richard. If it was because of a tweet I sent last week about a Guardian story headlined 'Professors eat their young', I did delete it fairly swiftly (I was going to comment negatively on it but decided just to delete it). We have followed each other for a couple of years or so now, and it's very rare for an academic to change their mind about following me. Perhaps there is another reason that I'm not aware of. Either way, as I say, I'm sorry to see you go )-;

Gary, I was unfollowing people en masse to trim my feed a bit. I was looking at profiles quickly, judging how relevant they were, and not making personal judgments about individuals or tweets! Perhaps your profile was unclear or very general, or I might have unfollowed you by mistake. I've refollowed, I think.